State of the TelemetryDeck Data Set

Over the last week, our servers were sometimes unable to calculate queries they were being sent. Here's why.

One thing I'm constantly working on is improving TelemetryDeck's query performance. We're still lacking in that regard, especially for very large data sets. This is getting better and better, but there is still so much to improve!

Query Calculation Durations

As you may know, we use Apache Druid to store our signals database. Druid scales really well horizontally and is very capable and performant, but it has a LOT of knobs to twiddle and can be very sensitive to changes, especially when it runs inside a Kubernetes cluster, as ours does. Druid is between 10 and 50 of the workloads inside our cluster.

Something Broke

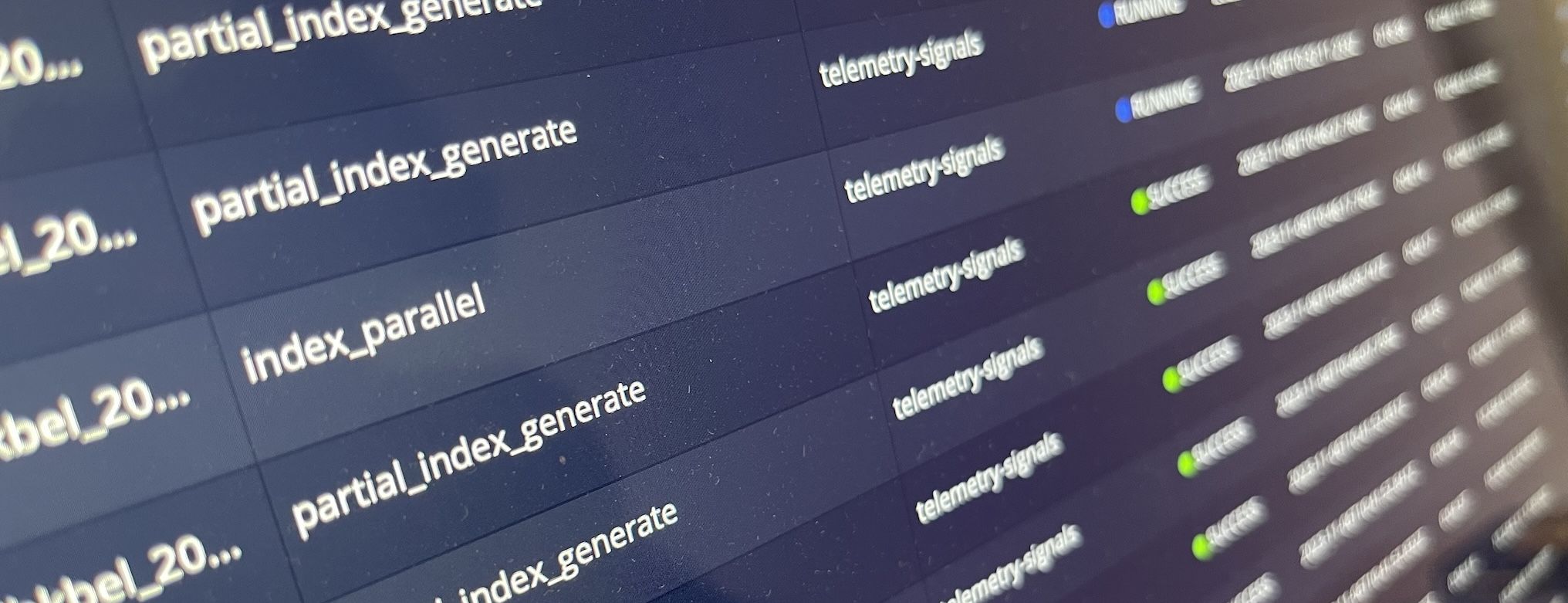

The mistake I made was trying to make multiple changes at once. I issued various Compaction Tasks (Druid's version of defragging, basically) while also updating the scaling settings to use much bigger machines (32 CPU cores, 128 GB of RAM), which caused some workloads to be shut down and others to restart. This crashed various tasks and left them in a broken in-between state.
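For readers who haven't seen one: a compaction task is a small JSON spec that gets submitted to Druid's task endpoint (`/druid/indexer/v1/task` on the Overlord). Here is a minimal sketch of building such a spec in Python. The data source name `signals` and the interval are made up for illustration, and a real spec would usually carry more tuning options than this.

```python
import json


def compaction_task(data_source: str, interval: str) -> dict:
    """Build a minimal Druid compaction task spec for one ISO-8601 interval."""
    return {
        "type": "compact",
        "dataSource": data_source,
        "ioConfig": {
            "type": "compact",
            "inputSpec": {"type": "interval", "interval": interval},
        },
    }


# Hypothetical data source name and interval, for illustration only.
task = compaction_task("signals", "2021-01-01/2021-02-01")
print(json.dumps(task, indent=2))
```

Building one spec per interval makes it easy to submit a single task, wait for it to finish, and only then touch anything else in the cluster.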

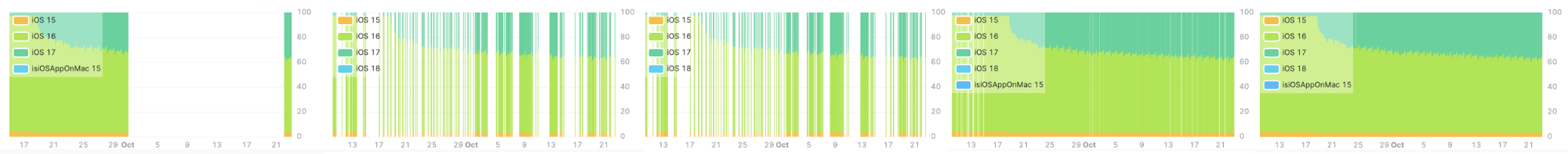

The servers answering queries are called Historicals. When a new Historical boots up, it requests all segments for the last 3 years from lukewarm storage – this takes about 6 hours because it's a lot of data, but it will load the data back to front, so it'll be able to contribute to queries way earlier, as soon as it has loaded 2 months or so.

The newly booted Historicals worked perfectly until they had finished most of their loading. Then they suddenly triggered errors like “Serialized Response Context too large”.

Let's fix it!

After several days of trying to clean the data and find the problem, it turned out there was a "thorny" segment somewhere between Sep 1 and Sep 15. Thorny in this context means that any query that touches the segment immediately fails with an error.
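One way to narrow down a failing time range like this is to bisect it: query one half of the range, see whether it errors, and repeat on the half that does. The sketch below is not a record of what I actually ran. `query_ok` is a hypothetical probe that would run a cheap query against the given interval and return `False` on an error, the year is invented, and the approach assumes there is exactly one bad region.

```python
from datetime import date, timedelta
from typing import Callable


def find_thorny_day(
    start: date, end: date, query_ok: Callable[[date, date], bool]
) -> date:
    """Bisect the half-open range [start, end) down to a single failing day.

    Assumes exactly one bad region, and that query_ok(a, b) returns False
    exactly when the interval [a, b) touches it.
    """
    while (end - start).days > 1:
        mid = start + timedelta(days=(end - start).days // 2)
        if query_ok(start, mid):
            start = mid  # first half is clean, so the problem is in the second half
        else:
            end = mid  # first half fails, so keep narrowing it
    return start


# Stand-in probe for illustration: pretend the bad segment sits on this day.
bad_day = date(2021, 9, 9)  # hypothetical date


def fake_probe(a: date, b: date) -> bool:
    return not (a <= bad_day < b)


found = find_thorny_day(date(2021, 9, 1), date(2021, 9, 16), fake_probe)
print(found)
```

With day-sized steps, a two-week range needs only about four probes, which matters when every probe against the real cluster is slow.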

Even dropping the month of September did not work at first. I finally managed to fix it by first dropping the month and then deleting and rebuilding each Historical one by one, so as to avoid further interruptions. After that, the queries worked again and were un-thorny, but September was missing.
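For context, one way to drop an interval in Druid is to mark its segments as unused through the Coordinator API (`POST /druid/coordinator/v1/datasources/{dataSource}/markUnused`), which removes them from query serving without deleting them from deep storage. The sketch below only builds the request path and body. It is an illustration under assumptions, not necessarily the mechanism used here: the data source name `signals` and the year are hypothetical.

```python
from datetime import date
from typing import Dict, Tuple


def mark_unused_request(
    data_source: str, year: int, month: int
) -> Tuple[str, Dict[str, str]]:
    """Build the path and JSON body to mark one calendar month as unused."""
    start = date(year, month, 1)
    # The interval end is exclusive: the first day of the following month.
    end = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    path = f"/druid/coordinator/v1/datasources/{data_source}/markUnused"
    body = {"interval": f"{start.isoformat()}/{end.isoformat()}"}
    return path, body


# Hypothetical data source name and year, for illustration only.
path, body = mark_unused_request("signals", 2021, 9)
print(path)
print(body)
```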

Next up, I tried re-loading September from cold storage, but that failed multiple times. The thorny segment was also present on the worker servers responsible for re-ingesting cold storage data (the MiddleManagers), so I had to rebuild those next.

Annoyingly, all those load-data, drop-data, and manage-data tasks take ages to run, even on very powerful hardware, because the dataset is BIG. Lots of files to load, rows to extract and transform, etc. So with everything I try, it takes up to a day to see whether it succeeded.

But – rebuilding the MiddleManagers did indeed work. I could now reload the data from cold storage and add it back into the data set.

What did we learn?

Here's what I've learned: it was worth being paranoid about our data. Every time we had downtime or even data loss, I added additional precautions so that it couldn't happen again. We have multiple redundant and tested levels of backup. The ingestion servers are hardened and tested, and they never refused to accept incoming data, even when the infrastructure behind them changed.

All this meant that the last week was stressful for me and annoying for some customers, but there was no lasting damage. Phew! Now I can finally go back to working on more fun projects. Or maybe write a book about managing Apache Druid clusters with what I've learned.